SFTP as a protocol for exchanging data across enterprises refuses to die.

No matter how much you may want this ancient protocol to just fade away, it’s very unlikely to — probably because it’s the lowest common denominator among enterprises.

But SFTP is just a protocol; it simply moves data between a client and a server. There’s always a business process or workflow that is associated with the sending or receiving of data that a protocol is unequipped to handle.

To address workflow requirements, managed file transfer (MFT) builds upon the “raw” protocol to support the workflow that happens before data is sent and after it is received. Here’s my definition of MFT:

Managed file transfer is a set of workflow definition and execution tools that are integrated with file transfer protocols to accomplish end-to-end business processes reliably and securely.

Common products in this area include Globalscape and MOVEit. The limitations of these enterprise-class MFT systems have become painfully evident in the last couple of years. They’re big, expensive and dangerously insecure.

Given the cost and risk of old-school MFT, the question is, “How does an enterprise create a secure, native MFT environment using SFTP in the cloud?”

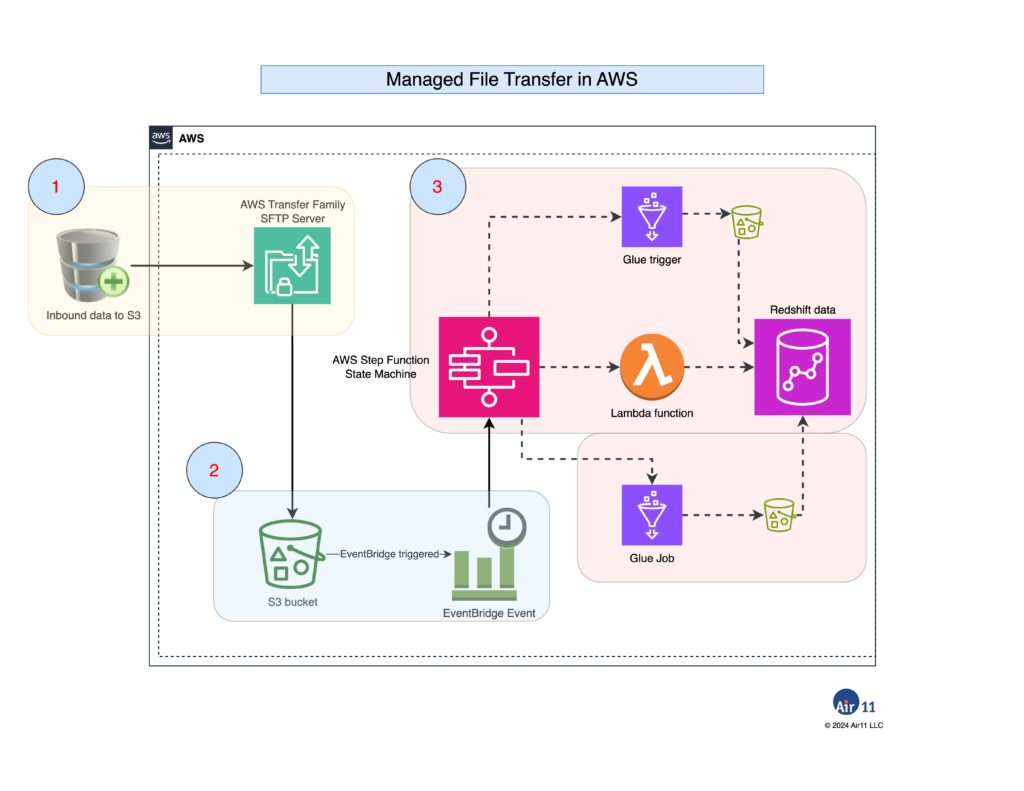

Below, I outline a cloud-native, event-driven design using orchestration of AWS services. Using a design like this, you can ingest data from multiple sources using SFTP, transform data using extract, transform and load (ETL) services and then store it in a data lake.

In this blog post, I just outline which AWS services do what in a typical MFT workflow. In future posts, I’ll get into the nitty-gritty details, like provisioning and security. If you have specific areas you’d like detailed, please let me know in the comments below. Comments are encouragement. 🙂

All of the usual AWS and cloud goodness applies here, including scalability, AWS management of public-facing MFT endpoints and pay-as-you-go. In addition, by orchestrating several AWS services together, MFT workflows can be easily created, modified and managed, all using a true event-driven architecture.

From left to right in the drawing above:

- An AWS Transfer Family SFTP server accepts inbound SFTP transfers, preferably authenticated by SSH key only, and writes them to an S3 bucket. AWS Transfer Family supports IAM “scope-down” policies that limit SFTP users to specific buckets and to specific key prefixes in those buckets. In practice, I used a bucket-per-orchestration design.

- Because S3 is “hard-wired” to Amazon EventBridge (once EventBridge notifications are turned on for the bucket), the arrival of a new object can directly trigger an EventBridge rule that matches `PutObject` (“Object Created”) events. Optionally, the S3 bucket is versioned, with a lifecycle policy that preserves and, eventually, archives inbound data. The EventBridge rule’s target is an AWS Step Functions state machine.

- The state machine triggers AWS Glue jobs, Glue triggers and AWS Lambda functions as necessary to accomplish an MFT workflow. In this example, Amazon Redshift is the target output for this workflow. State machines can optionally wait for a process to complete, making it possible to validate the output of one orchestration step before starting another.
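The EventBridge rule in the list above is driven by an event pattern. Here is a minimal Python sketch that builds one for S3 “Object Created” events, plus a deliberately simplified local matcher (exact strings and `prefix` only, not EventBridge’s full matching semantics) so the pattern can be sanity-checked against a trimmed sample event. The bucket name `mft-inbound-acme`, the `inbound/` prefix, and accepting `CompleteMultipartUpload` alongside `PutObject` (larger uploads may complete as multipart uploads) are assumptions for illustration. S3 only emits these events when EventBridge notifications are enabled on the bucket.

```python
import json

# Hypothetical bucket and a trimmed-down S3 "Object Created" event for local testing.
SAMPLE_EVENT = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "mft-inbound-acme"},
        "object": {"key": "inbound/orders.csv", "size": 1024},
        "reason": "PutObject",
    },
}


def s3_object_created_pattern(bucket: str, prefix: str = "") -> dict:
    """Build an EventBridge event pattern for new objects in one bucket."""
    detail = {
        "bucket": {"name": [bucket]},
        # Assumption: accept both single PUTs and completed multipart uploads.
        "reason": ["PutObject", "CompleteMultipartUpload"],
    }
    if prefix:
        # EventBridge prefix matching on the object key.
        detail["object"] = {"key": [{"prefix": prefix}]}
    return {"source": ["aws.s3"], "detail-type": ["Object Created"], "detail": detail}


def _value_matches(candidate, actual) -> bool:
    """Match one pattern entry: either an exact value or a {"prefix": ...} filter."""
    if isinstance(candidate, dict) and "prefix" in candidate:
        return isinstance(actual, str) and actual.startswith(candidate["prefix"])
    return actual == candidate


def matches(pattern: dict, event: dict) -> bool:
    """Simplified local check of a pattern against an event (not full EventBridge semantics)."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern object: recurse into the matching event object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif not any(_value_matches(c, actual) for c in expected):
            return False
    return True


if __name__ == "__main__":
    pattern = s3_object_created_pattern("mft-inbound-acme", prefix="inbound/")
    print(json.dumps(pattern, indent=2))
    print(matches(pattern, SAMPLE_EVENT))
```

The JSON printed by `json.dumps(pattern)` is what you would supply as the rule’s event pattern, with the state machine configured as the rule’s target.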

There are many moving parts here — configuring the AWS SFTP server, setting up IAM policies and configuring the state machine to run Glue routines, among others.
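Of those moving parts, the IAM scope-down policy is what keeps each SFTP user confined to their own folder. The sketch below emits such a policy as JSON, using the `${transfer:...}` session variables (`HomeBucket`, `HomeFolder`, `HomeDirectory`) that Transfer Family substitutes per user at login. Granting only list, put and get within the home folder is an assumption for an inbound drop box; adjust the actions to your workflow.

```python
import json


def inbound_scope_down_policy() -> dict:
    """Build a Transfer Family scope-down policy confining a user to their home folder.

    The ${transfer:...} placeholders are session variables filled in by
    Transfer Family; here they are plain strings, not Python templates.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Let the user list only their own folder, not the whole bucket.
                "Sid": "AllowListingOfUserFolder",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": ["arn:aws:s3:::${transfer:HomeBucket}"],
                "Condition": {
                    "StringLike": {
                        "s3:prefix": ["${transfer:HomeFolder}/*", "${transfer:HomeFolder}"]
                    }
                },
            },
            {
                # Assumption: uploads plus read-back, which some SFTP clients need after a PUT.
                "Sid": "HomeDirObjectAccess",
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject"],
                "Resource": "arn:aws:s3:::${transfer:HomeDirectory}*",
            },
        ],
    }


if __name__ == "__main__":
    # This JSON is what you would attach to the Transfer Family user as its scope-down policy.
    print(json.dumps(inbound_scope_down_policy(), indent=2))
```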

But the central idea is an old one: service orchestration. The key here is AWS Step Functions, whose visual designer, Workflow Studio, lets you build complex workflows graphically.
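To make the orchestration concrete, here is a minimal sketch of a state machine in Amazon States Language, built as a Python dict so its transitions can be checked before deployment. It follows the flow in the list above: a Lambda function validates the inbound file, a Choice state gates on the result, and a Glue job (which, in this example, would load Redshift) runs with the `.sync` integration so the state machine waits for it to finish. The function and job names, and the assumption that the validation Lambda returns `{"valid": true}`, are hypothetical. The execution input is assumed to be the S3 event delivered by the EventBridge rule.

```python
import json

# Hypothetical resource names; replace with your own.
VALIDATE_FUNCTION = "mft-validate-inbound"
GLUE_JOB = "mft-load-to-redshift"


def build_mft_state_machine() -> dict:
    """Return an ASL definition: validate, branch on the result, then run Glue and wait."""
    return {
        "Comment": "Sketch: validate an inbound SFTP file, then run a Glue load job",
        "StartAt": "ValidateFile",
        "States": {
            "ValidateFile": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": VALIDATE_FUNCTION, "Payload.$": "$"},
                # Keep the original event and store the Lambda result beside it.
                "ResultPath": "$.validation",
                "Next": "IsFileValid",
            },
            "IsFileValid": {
                "Type": "Choice",
                "Choices": [
                    {
                        # Assumes the Lambda returns {"valid": true|false}.
                        "Variable": "$.validation.Payload.valid",
                        "BooleanEquals": True,
                        "Next": "TransformAndLoad",
                    }
                ],
                "Default": "RejectFile",
            },
            "TransformAndLoad": {
                "Type": "Task",
                # ".sync" makes Step Functions wait for the Glue job run to complete.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {
                    "JobName": GLUE_JOB,
                    "Arguments": {
                        "--source_bucket.$": "$.detail.bucket.name",
                        "--source_key.$": "$.detail.object.key",
                    },
                },
                "End": True,
            },
            "RejectFile": {
                "Type": "Fail",
                "Error": "InvalidInboundFile",
                "Cause": "Validation Lambda rejected the file",
            },
        },
    }


def dangling_transitions(definition: dict) -> list[str]:
    """List StartAt/Next/Default/Choice targets that do not name an existing state."""
    states = definition["States"]
    targets = [definition["StartAt"]]
    for state in states.values():
        targets += [state[k] for k in ("Next", "Default") if k in state]
        targets += [choice["Next"] for choice in state.get("Choices", [])]
    return [t for t in targets if t not in states]


if __name__ == "__main__":
    definition = build_mft_state_machine()
    print(json.dumps(definition, indent=2))
```

The serialized JSON can be pasted into the Step Functions console to inspect it in the visual designer, or passed as the `definition` when creating the state machine (for example, with boto3’s `create_state_machine`).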

The bottom line is that by letting AWS Transfer Family handle the internet-facing side of SFTP transfers and coupling that to the internal needs of an MFT workflow using orchestration, enterprises can achieve vastly better security, scalability and customization compared to old-school, monolithic managed file transfer products.

Once again, if you have specific feedback about which parts of this design you’d like to see expanded on in future blog posts, let me know in the comments.
